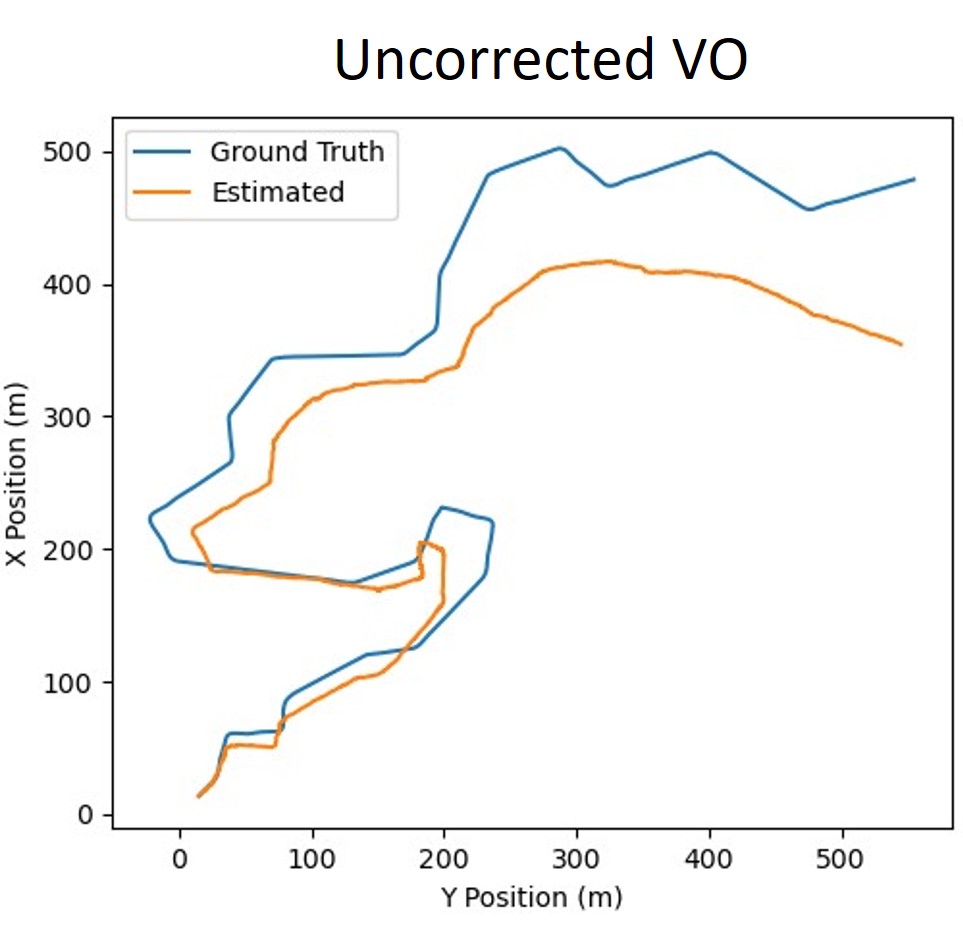

The pose of a drone is composed of its position and orientation in 3D space. Given the initial pose of the drone, a VO system can be used to compute the pose of the drone at the next time step from the images captured at the current and next time steps. It accomplishes this by finding matching keypoints between the two images and using the change in position of those keypoints to compute the change in pose of the camera. In this project, the ORB feature extractor is used to obtain the image features. The current pose of the drone is estimated by starting from the initial pose and adding the pose change from every image pair in the flight sequence up to the current time step. The challenge with VO is that each pose change estimate contains a small error. When the pose changes are added across a long sequence, these errors accumulate and cause the pose estimate of the drone to drift substantially from the ground truth. This project uses machine learning models to reduce the error in the pose change estimate at each time step.
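The accumulation of drift can be illustrated with a minimal sketch. It is not the project's VO pipeline: it assumes a simplified planar pose `(x, y, theta)` instead of a full 3D pose, and the helper names (`compose`, `integrate`, `position_error`), the straight-line flight, and the per-step heading bias of 0.002 rad are all hypothetical values chosen for illustration.

```python
import math

def compose(pose, delta):
    """Apply a body-frame pose change (dx, dy, dtheta) to a world-frame pose (x, y, theta)."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    # Rotate the body-frame translation into the world frame, then add it.
    x_new = x + math.cos(theta) * dx - math.sin(theta) * dy
    y_new = y + math.sin(theta) * dx + math.cos(theta) * dy
    return (x_new, y_new, theta + dtheta)

def integrate(initial_pose, deltas):
    """Chain per-step pose changes onto the initial pose and return the full path."""
    path = [initial_pose]
    for delta in deltas:
        path.append(compose(path[-1], delta))
    return path

def position_error(a, b):
    """Euclidean distance between the positions of two poses."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

# Hypothetical flight: 100 steps of 1 m straight ahead.
true_deltas = [(1.0, 0.0, 0.0)] * 100
# The same flight with an assumed small heading error in every step's estimate.
vo_deltas = [(1.0, 0.0, 0.002)] * 100

true_path = integrate((0.0, 0.0, 0.0), true_deltas)
vo_path = integrate((0.0, 0.0, 0.0), vo_deltas)

# Position error between the two paths at every time step.
drift = [position_error(t, v) for t, v in zip(true_path, vo_path)]
```

Although each step's heading error is tiny, `drift` grows steadily along the sequence, which is the behavior the correction models are meant to counteract.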

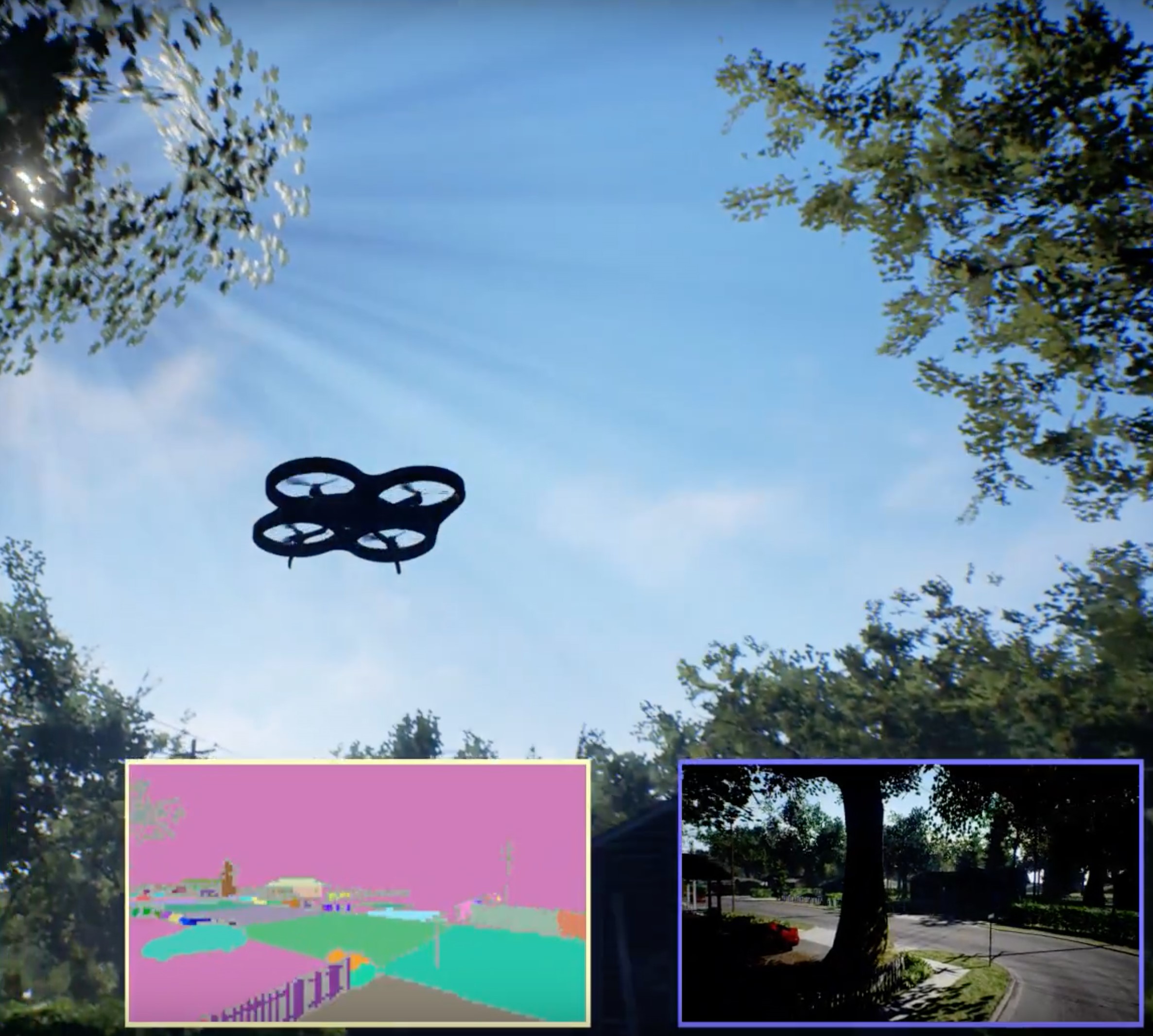

To collect image and training data for this project, a simulation was performed using AirSim, Unreal Engine 4 and the PX4 flight control software. Unreal Engine 4 was used to generate the photorealistic environments in which the drone flies, and AirSim was used to place a drone in the environment and provide an interface for capturing image data from the drone's camera. PX4 is a commonly used flight controller and was used in the simulation to control the drone and collect telemetry from the drone's onboard sensors, such as GPS and IMU data. Visual odometry was applied to the image data captured during the simulation to generate the estimated drone paths. The pose estimate generated by the drone's internal state estimator using GPS is treated as the ground truth pose of the drone.

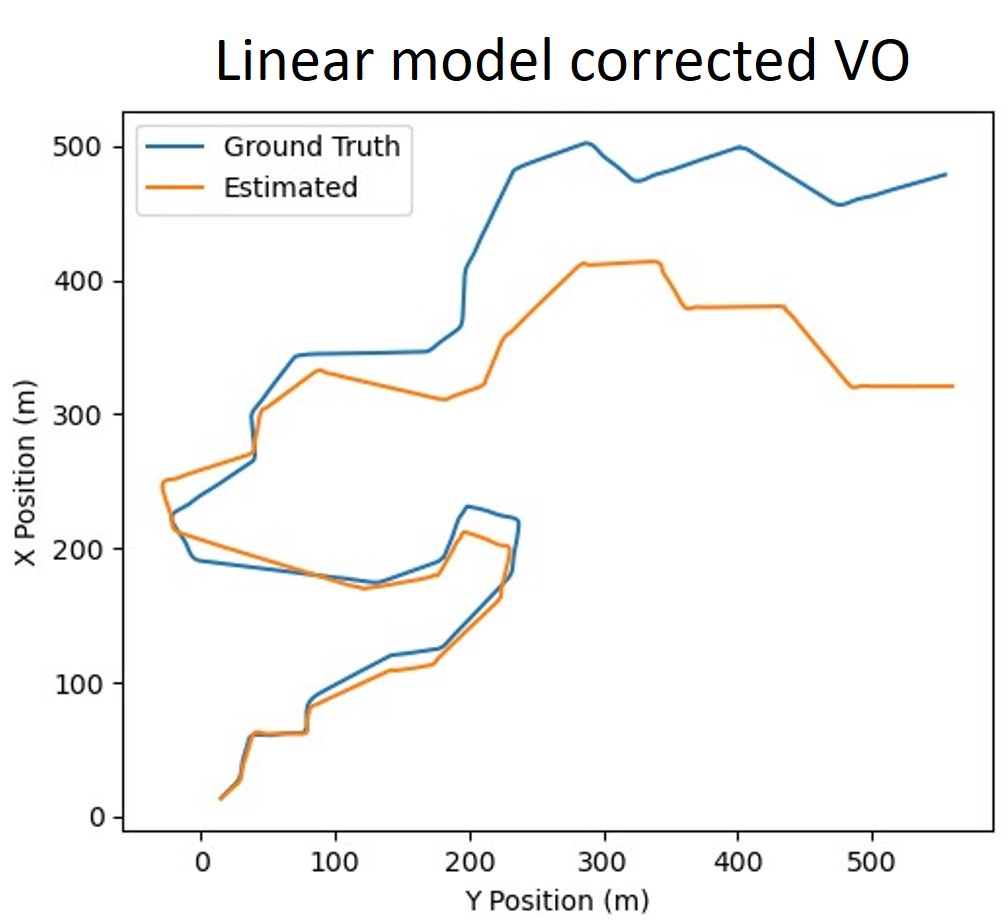

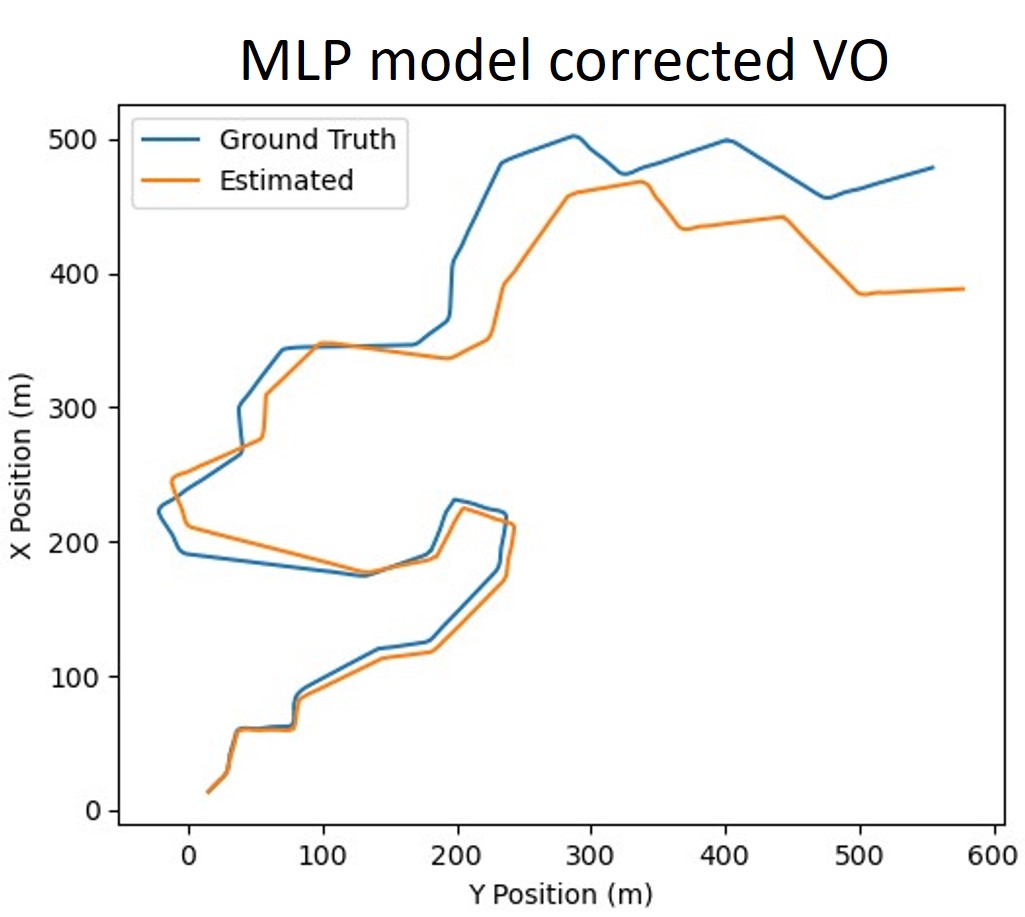

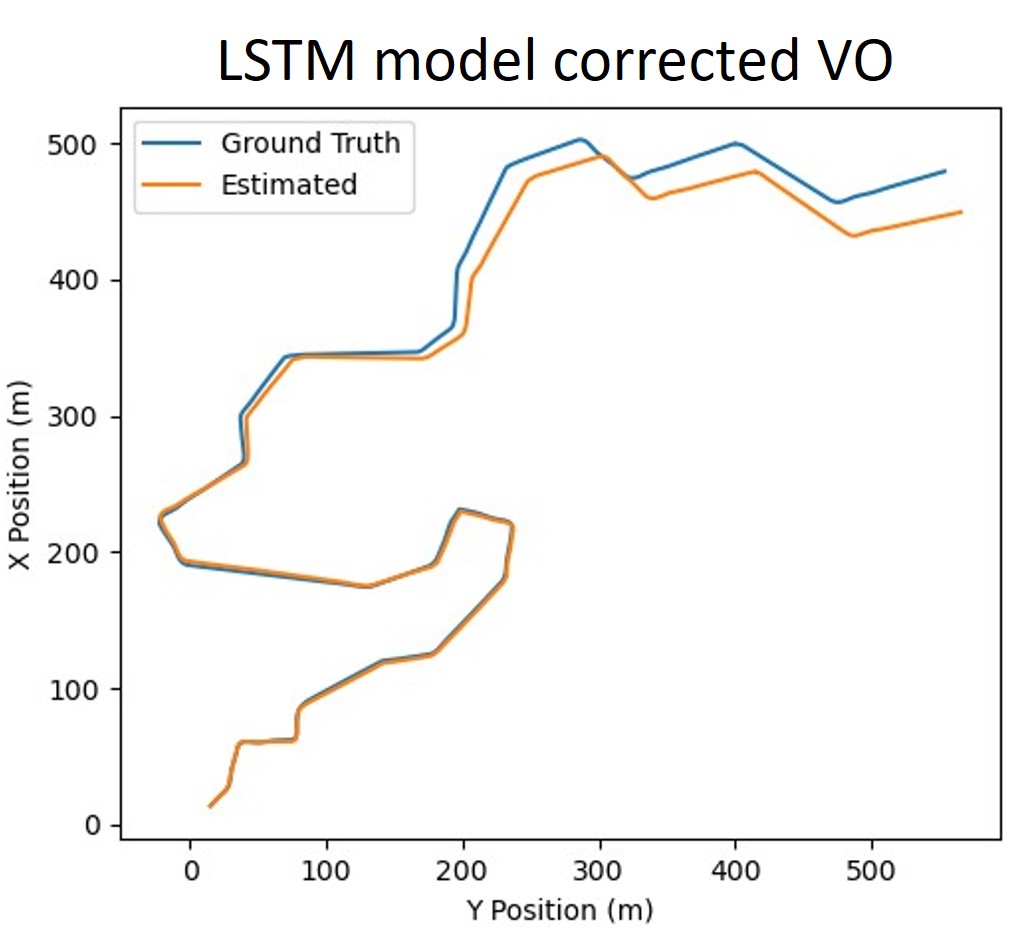

Three different types of models were developed for this project. First, a linear regression model using L2 regularization was developed as a baseline. Next, a multilayer perceptron (MLP) model was developed to capture more complex non-linearities across a single time step. Finally, a long short-term memory (LSTM) model was created to take advantage of the time-series nature of the data. The models all made use of similar input features, namely the VO estimated pose, IMU data, statistics describing the image keypoint motion, actuator controls and motor control signals. The models were regressed against the ground truth pose of the drone at each time step. The linear model and MLP used features only from the previous time step, while the LSTM made use of features from several time steps into the past. The scikit-learn machine learning library and PyTorch deep learning library were used to develop the models. The corrected VO path of the drone in the horizontal XY plane using the various models can be seen in the plots below.
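The difference between the single-step inputs of the linear model and MLP and the multi-step inputs of the LSTM can be sketched as a windowing step over the per-time-step feature rows. This is an illustrative sketch only: the function name `make_windows`, the toy feature values, and the window length of 3 are assumptions, since the actual sequence length used by the LSTM is not stated here.

```python
def make_windows(features, targets, window):
    """Pair each target with the `window` feature rows that precede it.

    features: list of per-time-step feature rows
    targets:  list of per-time-step regression targets (e.g. ground truth pose)
    window:   number of past time steps given to the model
    """
    samples = []
    for t in range(window, len(features)):
        history = features[t - window:t]  # rows t-window .. t-1
        samples.append((history, targets[t]))
    return samples

# Toy data: 6 time steps, 2 features per step (hypothetical values).
features = [[i, i * 10] for i in range(6)]
targets = list(range(100, 106))

# Linear model / MLP: features from the previous time step only.
single_step = make_windows(features, targets, window=1)

# LSTM: a short history of past time steps (window length assumed).
sequences = make_windows(features, targets, window=3)
```

With `window=1` each sample holds one feature row, which could be flattened into a vector for a scikit-learn regressor or an MLP; with a larger window each sample is a short sequence of rows, which is the shape a recurrent model such as an LSTM consumes.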